Most People Get Headline A/B Testing Wrong. Here’s How to Do It Right.

Learn how to design smarter headline tests and get real insights.

A great headline can make or break your open rate. It’s your first, and sometimes only, chance to earn a reader’s attention.

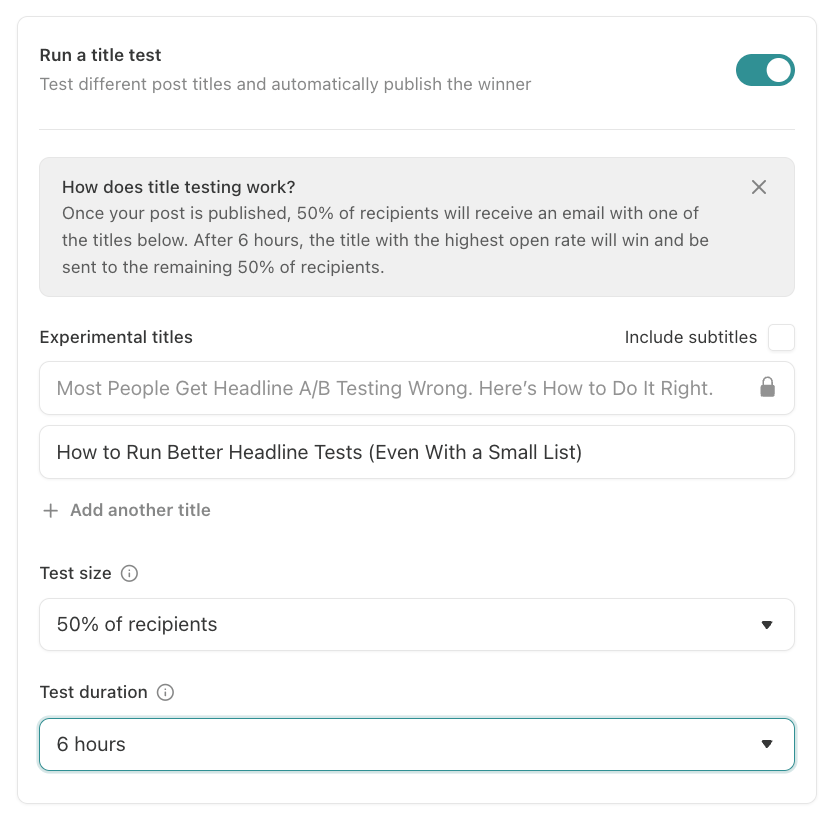

And now that Substack finally supports A/B testing, you can actually measure what works and improve your open rate with every send.

Done well, headline testing helps you:

Boost email opens (and reach more readers).

Understand what messaging resonates.

Sharpen your intuition over time.

But here’s the catch: Most people get headline testing completely wrong.

They peek at the data too early, test copy tweaks too small to matter, and declare winners based on noise rather than insight.

This guide will show you how to do it right, especially if you’re working with a small or mid-sized list.

4 Ways People Get Headline Testing Wrong

Substack’s A/B testing feature makes it feel like you’re running a clean experiment.

But most people end up making one of these four mistakes and walk away with misleading results (or no insights at all).

1. Testing Without a Clear Hypothesis

The best headline tests aren’t just about performance; they’re about learning.

What kind of framing works?

What structure drives curiosity?

What emotional tone does your audience respond to?

If you’re not testing toward those kinds of questions, you’re just guessing. Worse, you’re missing the chance to build a repeatable strategy.

The real value of headline testing lies in compounding: the more intentional your tests are, the more your success rate will improve over time.

2. Declaring a Winner Too Early

Open rates can swing wildly in the first hour, especially if you send early in the morning or hit a pocket of highly engaged readers. Remember, those first few opens don’t tell the full story.

If you peek at your results 15 minutes after hitting send and one version is “winning,” don’t trust it yet. Stopping a test early, even unintentionally, increases your chance of landing on a false positive.

Allow your test to run as scheduled and give the results time to settle.
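If you want to see why peeking is risky, here’s a rough simulation. It runs many tests where both headlines are identical (so any “winner” is a false positive) and compares checking at several points against checking only once at the end. Everything here is an assumption for illustration: the 40% open rate, the five checkpoints, the 1.96 significance cutoff, and the function names. It also counts progress by recipients rather than by time, which is a simplification.

```python
import math
import random


def is_significant(opens_a, opens_b, n, z_crit=1.96):
    """Pooled two-proportion z-test with n recipients per variant."""
    pooled = (opens_a + opens_b) / (2 * n)
    if pooled in (0, 1):
        return False  # no variation yet, nothing to compare
    std_err = math.sqrt(pooled * (1 - pooled) * 2 / n)
    return abs(opens_a - opens_b) / n / std_err > z_crit


def false_positive_rates(trials=1000, per_variant=500, looks=5,
                         open_rate=0.4, seed=1):
    """Return (rate when peeking at every checkpoint, rate when checking once)."""
    rng = random.Random(seed)
    step = per_variant // looks
    peeking_hits = final_hits = 0
    for _ in range(trials):
        opens_a = opens_b = 0
        flagged_at_any_look = False
        for n in range(1, per_variant + 1):
            # Both variants share the same true open rate.
            opens_a += rng.random() < open_rate
            opens_b += rng.random() < open_rate
            if n % step == 0 and is_significant(opens_a, opens_b, n):
                flagged_at_any_look = True
        peeking_hits += flagged_at_any_look
        final_hits += is_significant(opens_a, opens_b, per_variant)
    return peeking_hits / trials, final_hits / trials


peeking, final_only = false_positive_rates()
print(f"False positives when peeking: {peeking:.1%}")
print(f"False positives when waiting: {final_only:.1%}")
```

In a setup like this, the peeking rate should come out noticeably higher than the wait-until-the-end rate, even though neither headline is actually better.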

3. Not Collecting Enough Data

Even if you wait a few hours, most newsletters don’t have enough volume for statistically reliable results. If each A/B version only gets 100–200 opens, that’s not enough to detect a real lift unless the difference is huge.

But that doesn’t mean you shouldn’t test. It means you should:

Focus on big differences, not small tweaks.

Look for directional insights, not false precision.

Avoid overinterpreting minor changes in open rate.

A “2% lift” might sound good, but without enough data to back it up, it’s probably just noise.
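To put a number on “probably just noise,” here’s a minimal sketch of a standard two-proportion z-test. The function name and the example figures are hypothetical, and it reads the “2% lift” as two percentage points (40% vs. 42%), which is an assumption. A p-value above 0.05 is conventionally treated as not enough evidence of a real difference.

```python
import math


def two_proportion_p_value(opens_a, sent_a, opens_b, sent_b):
    """Two-sided p-value for the gap between two open rates (pooled z-test)."""
    rate_a = opens_a / sent_a
    rate_b = opens_b / sent_b
    pooled = (opens_a + opens_b) / (sent_a + sent_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / sent_a + 1 / sent_b))
    z = (rate_b - rate_a) / std_err
    # Two-sided tail probability of the standard normal distribution.
    return math.erfc(abs(z) / math.sqrt(2))


# Hypothetical small test: 400 recipients per headline, 40% vs. 42% opens.
print(two_proportion_p_value(160, 400, 168, 400))

# Same open rates with 100x the audience.
print(two_proportion_p_value(16_000, 40_000, 16_800, 40_000))
```

With these made-up numbers, the small test lands well above 0.05, while the same two-point gap on the much larger audience falls far below it. The open rates are identical; only the amount of data changed.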

4. Obsessing Over Tiny Changes

A lot of people use headline testing to tweak adjectives or reorder phrases, like “10 Smart Writing Tips” vs. “10 Writing Tips That Work.”

That’s fine if you have 50,000+ subscribers. But for most writers, these micro-changes won’t create a measurable difference, and they distract from testing what actually matters.

If your headline test doesn’t look meaningfully different at a glance, it’s probably not worth running.

Instead, test real contrasts:

Positive vs. negative framing.

Formal vs. conversational tone.

List vs. narrative.

Short vs. long.

Small Lists Require Big Swings

Here’s the truth most testing advice skips: The smaller your list, the bigger your changes need to be.

If you regularly get tens of thousands of opens, you can detect even minor differences between headlines (like a 1% lift in open rate) with confidence. But if your audience is smaller (or less engaged), you’ll need to test bigger swings to see what works.

That’s why most writers should focus on:

Testing bold, high-contrast ideas.

Tracking patterns across multiple tests.

Learning directionally, not chasing precision.
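For a rough sense of scale, the sketch below uses the standard sample-size approximation for comparing two proportions. The 40% baseline open rate, the 5% significance level, and the 80% power target are all assumptions, as is the function name, and a “lift” here means percentage points.

```python
import math
from statistics import NormalDist


def recipients_needed_per_variant(base_rate, lift, alpha=0.05, power=0.80):
    """Approximate recipients per headline needed to detect `lift`."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    new_rate = base_rate + lift
    variance = base_rate * (1 - base_rate) + new_rate * (1 - new_rate)
    return math.ceil((z_alpha + z_power) ** 2 * variance / lift ** 2)


# Hypothetical 40% baseline: a 1-point lift vs. a 10-point lift.
print(recipients_needed_per_variant(0.40, 0.01))
print(recipients_needed_per_variant(0.40, 0.10))
```

Under these assumptions, a one-point lift needs tens of thousands of recipients per headline, while a ten-point lift needs only a few hundred. That gap is the case for big swings on a small list.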

How to Make the Most of Headline Testing (When You Don’t Have a Huge List)

Just because you don’t have thousands of opens per test doesn’t mean you can’t learn. It just means you need to test smarter. Here’s how:

1. Test Headlines That Actually Contrast

Remember to test headlines that feel meaningfully different: emotionally, structurally, or tonally.

Don’t waste your time on “Tips for Writers” vs. “Writing Tips.” Instead, compare “5 Top Tips for Writers” vs. “Stop Writing Like This (And What to Do Instead).” Those two headlines have dramatically different tones and styles, so you’re more likely to observe a difference in open rates.

Remember, if your audience is small, don’t waste your tests on micro-optimizations. Make bold, strategic changes that have a shot at producing meaningful insights.

2. Stick to Two Variants

Substack will let you test up to four headlines, but doing so dilutes your data. More variations mean smaller samples per version, which means less reliable results.

Stick to two variants at a time to increase your chances of confidently detecting a winner.

3. Maximize Audience Size and Duration

The smaller your audience, the more of it you need to include in the test, and the longer you need to let it run, to collect enough opens before the winner is picked.

Substack lets you send your test to up to 50% of your list and run it for up to 6 hours before selecting a winner. Use these maximums for the best chance of confidently detecting a winner.
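The arithmetic behind these settings is simple enough to sketch. The helper below is hypothetical and assumes the test group is split evenly across headlines, which may not match exactly how Substack divides it.

```python
def per_variant_recipients(list_size, test_fraction=0.5, variants=2):
    """Recipients per headline, assuming an even split of the test group."""
    test_group = int(list_size * test_fraction)
    return test_group // variants


# Hypothetical list of 2,000 subscribers.
print(per_variant_recipients(2000))                    # half the list, two headlines
print(per_variant_recipients(2000, variants=4))        # half the list, four headlines
print(per_variant_recipients(2000, 0.25, variants=4))  # a quarter of the list, four headlines
```

Each step down in test size or up in variant count shrinks the sample behind every headline, and open counts shrink along with it.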

4. Focus on Patterns Rather Than Individual Winners

One single test won’t tell you much. But five tests on the same theme? That’s where real insight comes from.

For example, let’s say you run a test and find that “Start Your Free Substack in 10 Minutes” generates a much higher open rate than “The Ultimate Guide to Starting a Substack.”

What was the key to the improvement? Was it the word free? The time-based promise? The sentence structure? You can’t know yet, but if you keep testing those elements in future headlines, clear patterns will emerge.

You’re not just trying to win this test; you’re trying to get smarter with every send.

Every Headline Test Is an Opportunity to Learn

Substack’s A/B testing tool makes it easy to feel like you’re optimizing, but if you’re not careful, you’re just chasing noise.

Don’t treat headline testing as a slot machine; treat it as a learning engine. Design your tests with intention, and build towards repeatable patterns.

Smart testing compounds over time. You won’t just get better headlines; you’ll become a better copywriter and a better creator.

To endless possibilities,

Casandra

🎁 Premium Bonus: 5 Evidence-Based Headline Patterns + Examples of How to Test Them

Want to test your headlines more strategically and find repeatable patterns that work again and again?

This bonus guide for Really Good Business Ideas Premium subscribers breaks down five research-backed headline patterns that can be applied to any niche. Each one includes examples of how to test it so you can measure and validate its effectiveness for your own audience.