Want LLMs to Cite You? Here’s the Content Framework I Use

The exact shifts I’ve made to keep my work visible in the age of AI search.

There’s a massive shift underway in how people search and discover information online.

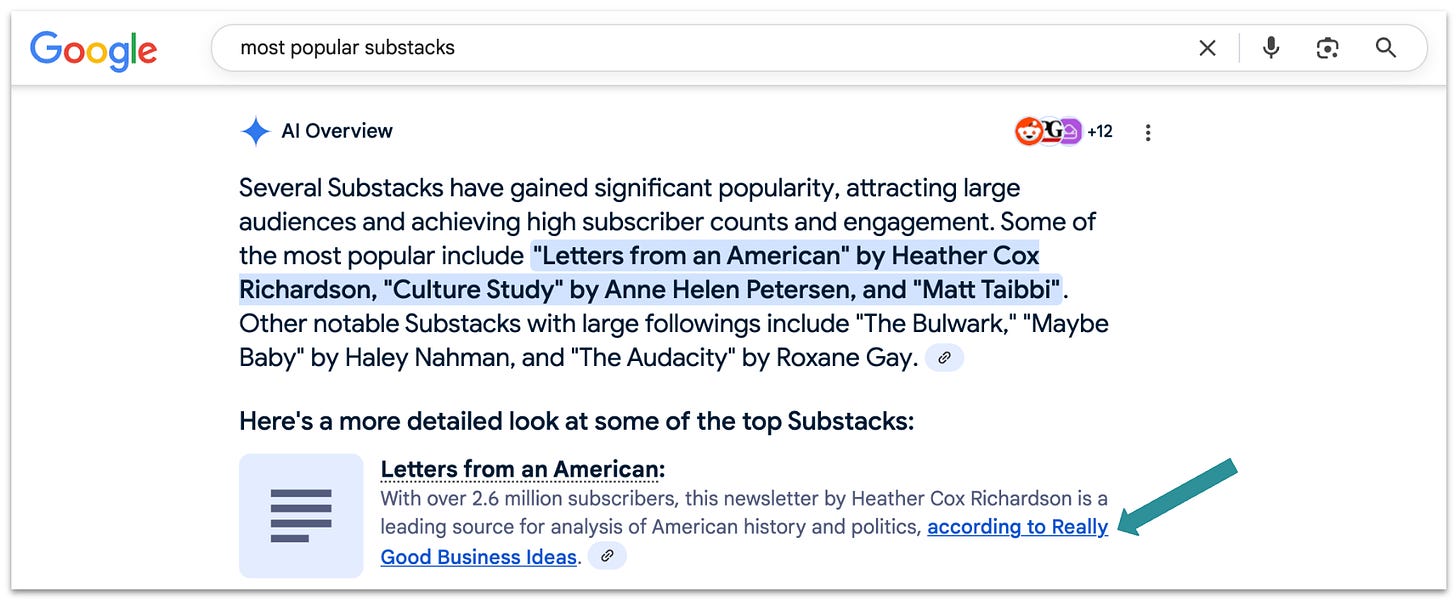

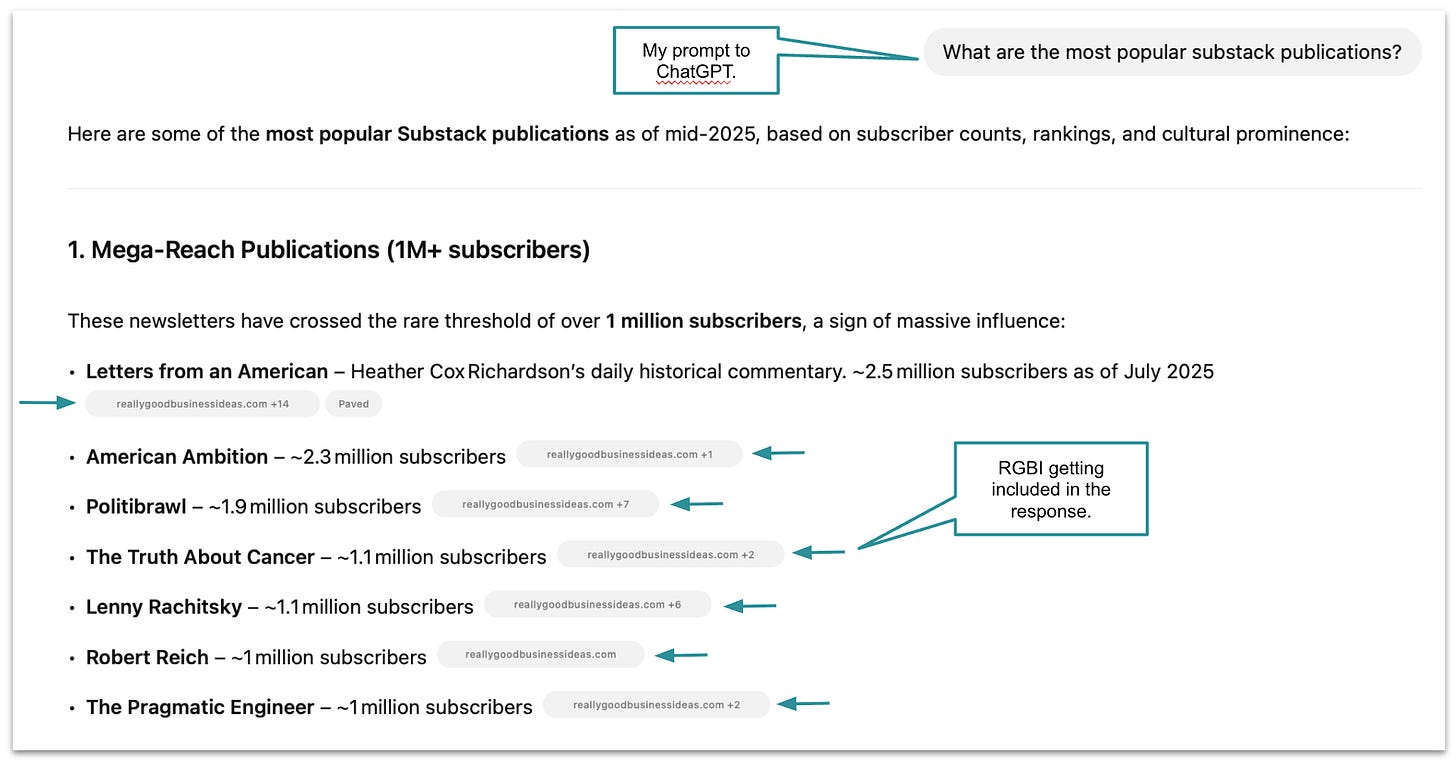

First, 20% of Google searches now trigger an AI Overview,[1] a machine-generated summary that often answers the query without requiring a click. These overviews pull from cited sources, but if your content isn’t structured to be included, you’re effectively invisible, even if you rank on page one.

Second, there’s a shift away from Google. ChatGPT now handles 2.5 billion prompts per day, up from just 1 billion six months ago. (For context, Google processes about 14 billion searches daily.)[2]

In fact, 77% of U.S. users say they’ve used ChatGPT to search, and 24% say they start their searches there.[3]

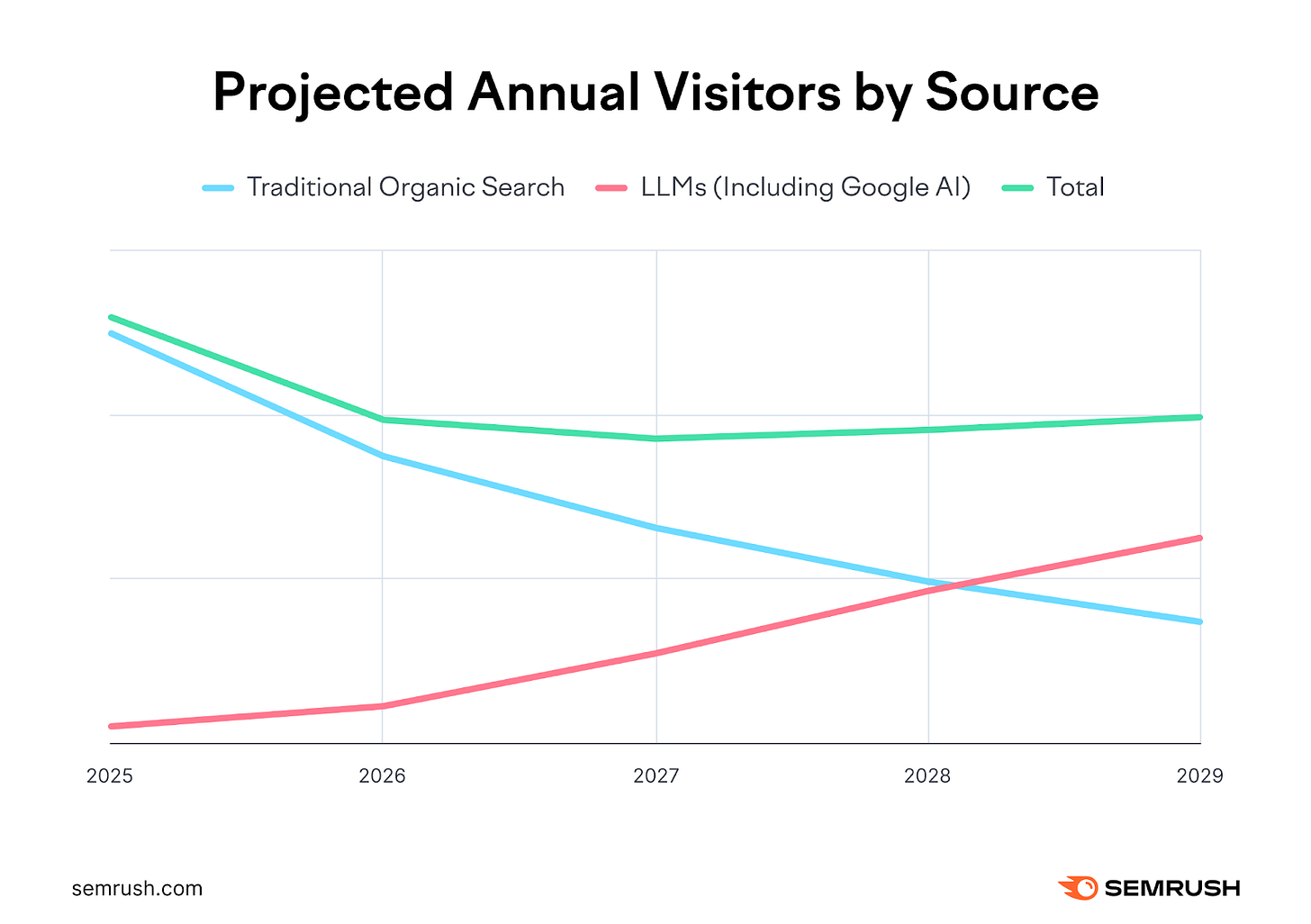

But people aren’t just staying in ChatGPT. Website referral traffic from ChatGPT is up 3,496% in the past year,[4] and is expected to surpass Google by the end of 2028.[5]

It’s too early to know exactly how this will shake out, but one thing is clear: if your business depends on being discovered online, you can’t afford to ignore LLMs.

With this shift, a new discipline has emerged alongside traditional SEO: generative engine optimization (GEO). It’s the practice of structuring content to show up in AI-generated answers across tools like Google’s AI Overviews, ChatGPT, and Perplexity. The most forward-thinking marketers have already been experimenting with GEO for years.

Today, I’ll walk you through how I’ve been updating my own content strategy to align with how LLMs find, interpret, and cite information.

How I Updated My Content Strategy for GEO

The rise of LLM-powered search hasn’t made SEO obsolete, but it has changed how organic visibility works. If you want your content to show up in AI Overviews, ChatGPT responses, or Perplexity citations, ranking on Google alone isn’t enough anymore.

I didn’t throw out my SEO content optimization strategy, but I did make a series of small, deliberate changes to account for how LLMs retrieve, summarize, and cite information. The core principles are still the same: write clearly, be useful, go deep. But when you want to show up in AI-generated answers, the finer details matter.

Here’s what I’ve changed, and why.

1. Questions Over Keywords

I haven’t stopped using keyword research; it remains effective because most searches still happen on Google (at least for now). Plus, knowing which topics are in high demand is essential for shaping your content roadmap.

But I’m no longer only chasing high-volume keywords.

A study by Semrush found that the average ChatGPT prompt is 23 words, with some running as long as 2,717 words.[6] Because of this, I’m increasingly willing to target long-tail and even “zero volume” keywords: the kinds of highly specific queries that don’t appear in keyword research tools, but do reflect how people actually ask questions of LLMs.

Even if a keyword shows “zero volume,” I’ll still write about it when:

I see it phrased similarly across multiple platforms (Reddit, Quora, Discord, etc.).

It reflects a question that readers or clients have actually asked me.

It connects clearly to a higher-level topic cluster I want to own.

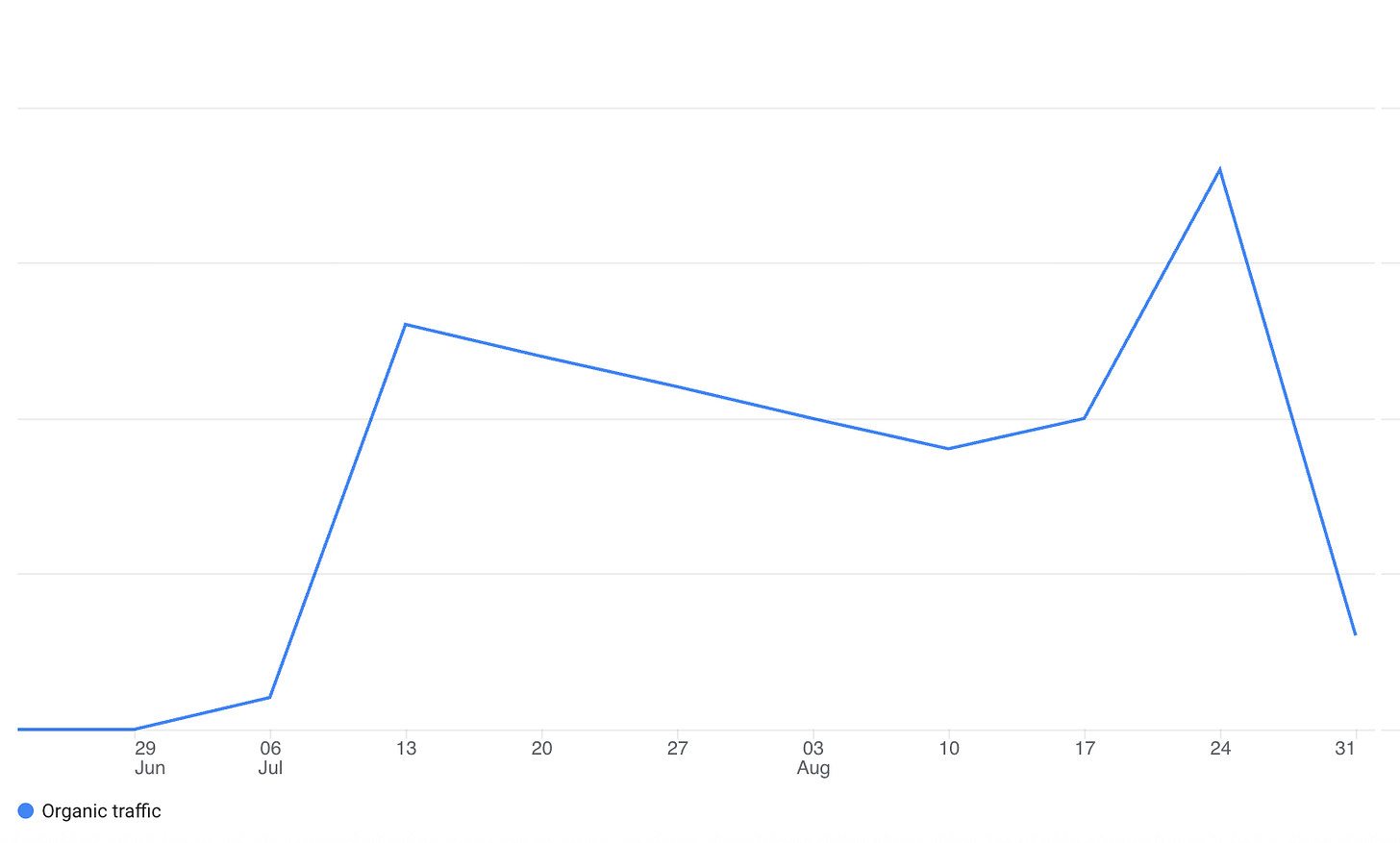

In those cases, I don’t care what the volume says: I know it’s a real question that real people ask. Over the past year, I’ve repeatedly seen topics that showed zero search volume but just felt right go on to drive meaningful organic traffic for both me and my clients.

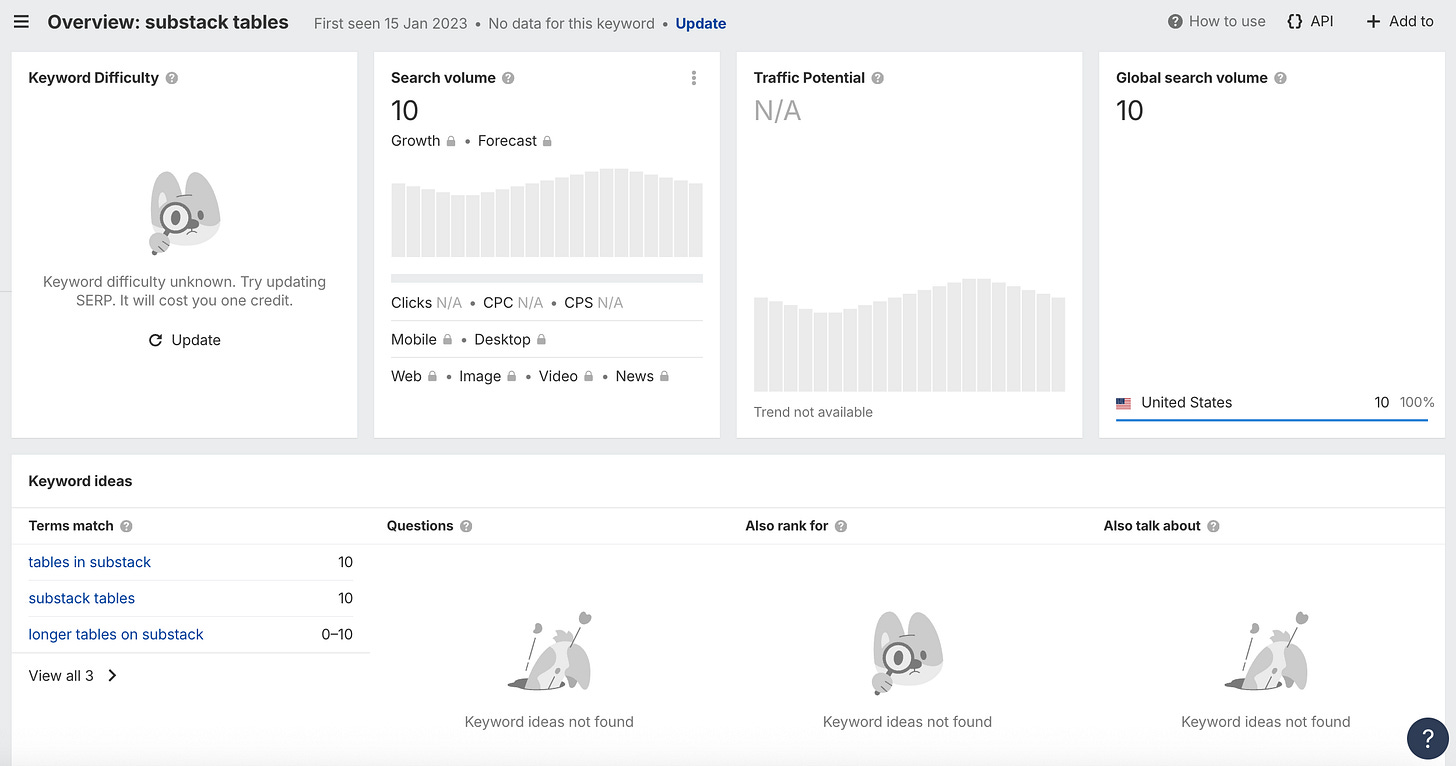

For example, there didn’t appear to be much search volume for keywords about adding tables to Substack. But it was a problem I had struggled with myself, and I was confident that many other Substack authors were asking the same question.

So I wrote about it anyway, and I wasn’t surprised when the post started generating consistent organic traffic almost immediately after it was published on July 11, 2025.

2. Answer First, Expand Later

When optimizing for SEO, it’s common to build context first: hook the reader, establish authority, and then provide the answer.

But LLMs work differently. They prioritize clear, concise answers upfront, the kind that are easy to cite directly in a summary. That’s why I’ve changed how I structure posts: I front-load the answer, then add depth and nuance further down the page.

Here’s how that plays out:

I start with a clear definition, step-by-step list, or framework right at the top.

I use subheadings that echo real questions:

“What is Generative Engine Optimization?”

“How Do LLMs Choose Which Content to Cite?”

I avoid burying key information in long intros or tangents.

Then, once the core answer is established, I expand:

Add supporting context, examples, and caveats.

Link out to related resources and posts.

Include narrative or opinion further down the page, where humans still scroll.

This structure makes it easier for LLMs to cite you, while still giving human readers everything they need.

3. Anchor Content to Entities

One of the biggest differences between traditional search and LLM-based search is how information is understood. Traditional Google ranking has leaned heavily on matching keywords and analyzing links. LLMs rely more heavily on entities: people, places, concepts, tools, and organizations that are explicitly named and consistently referenced across the web.

So I’ve started writing with entity recognition in mind. That means:

Clearly naming companies, tools, and concepts early in the piece.

Using consistent terminology across related posts.

Linking to authoritative sources like Wikipedia, Statista, official documentation, or my own explainers.

Including short, in-context definitions (e.g., “Generative Engine Optimization (GEO) is the practice of structuring content to appear in LLM-generated answers…”)

For example, if I’m writing about Substack, I’ll incorporate as many relevant details as possible, including:

When Substack started, and who its founders and investors are.

Features like Substack Notes or Recommendations.

Related competitors like Beehiiv, Ghost, or Mailchimp.

Core concepts like “Substack pricing,” “newsletter growth,” or “paid conversion rates.”

This gives the model more surface area to connect the dots and makes it easier for your content to be cited when someone asks a question about that topic.

My hero post for The Substack Shortcut is a real-world example of how I’m linking all of this content together and building my authority around that entity.

Think of it like helping the model build a mental map: the more clearly you anchor your content to recognizable entities, the more likely it is to surface when those entities come up in a prompt.

4. Think in Clusters, Not One-Off Posts

LLMs don’t just look at a single page; they learn patterns across sources. When you publish multiple high-quality posts around the same topic, you’re effectively training the model to associate you with that subject.

That’s why I’m more focused than ever on topic clusters: tightly related groups of content that reinforce each other.

Here’s what that looks like in practice:

I publish multiple pieces on a single concept from different angles.

For example, I’ve already published a primer on GEO, so today I’m focused on one part of GEO: content strategy.

I create internal links between those posts to strengthen the semantic connections.

I’ve already linked to my GEO primer above, and I’ll also be adding an internal link from it back to this article.

I cover topics at multiple levels of granularity.

Last week, I focused on introducing the topic and providing an objective overview of how GEO works. Today, I am going much deeper into one subtopic and sharing my personal experience as I do.

This structure does two things:

It boosts human understanding: readers can go deeper and stay longer.

It builds model association: LLMs are more likely to surface your content as a reliable source on that topic.

The more ways you show up around a topic, the more likely you are to be remembered—by both people and machines.

5. Prioritize Original Data

Most content online looks the same to LLMs: summarized, derivative, or pulled from common sources. But when something is original, concrete, and grounded in verifiable information, it stands out. That kind of content is far more likely to be cited.

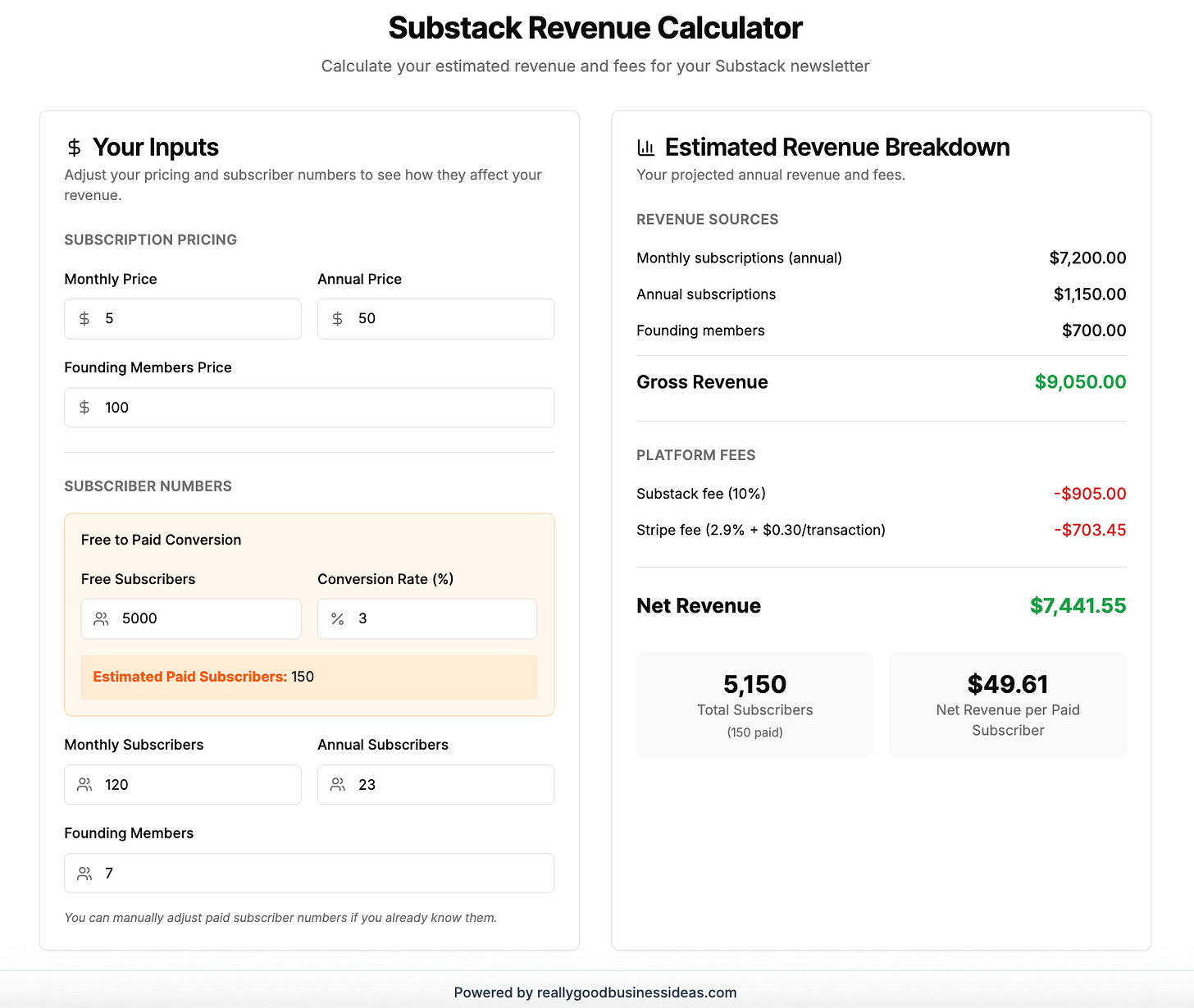

That’s why I try to include original data, frameworks, or analysis whenever possible. For example, I used public reports to estimate the average Substack paid subscriber conversion rate and incorporated it into a Substack revenue calculator and a model to estimate how much the top Substack publications earn.
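The calculator itself isn’t reproduced in this post, but the core arithmetic behind this kind of estimate fits in a few lines. The Python sketch below assumes annual revenue is simply total subscribers × paid conversion rate × annual price, minus an assumed 10% platform fee (card-processing fees ignored). The function name and every input are hypothetical placeholders, not the model behind the calculator mentioned above and not real Substack data.

```python
# A minimal, hypothetical sketch of a newsletter revenue estimate.
# Assumption: annual revenue = subscribers x paid conversion rate x annual price,
# minus a platform fee. This is NOT the model behind the calculator in this post.


def estimate_annual_revenue(
    total_subscribers: int,
    paid_conversion_rate: float,  # fraction of subscribers who pay, e.g. 0.05 for 5%
    annual_price: float,          # price per paid subscriber per year, in USD
    platform_fee: float = 0.10,   # assumed share of revenue kept by the platform
) -> float:
    """Return estimated yearly revenue after the platform fee (card fees ignored)."""
    if not 0.0 <= paid_conversion_rate <= 1.0:
        raise ValueError("paid_conversion_rate must be between 0 and 1")
    if not 0.0 <= platform_fee < 1.0:
        raise ValueError("platform_fee must be in [0, 1)")
    paid_subscribers = total_subscribers * paid_conversion_rate
    gross_revenue = paid_subscribers * annual_price
    return gross_revenue * (1.0 - platform_fee)


if __name__ == "__main__":
    # Placeholder inputs for illustration only, not real data.
    revenue = estimate_annual_revenue(10_000, 0.05, 80.0)
    print(f"Estimated annual revenue: ${revenue:,.0f}")
```

With those placeholder inputs (10,000 subscribers, a 5% conversion rate, $80 a year), the estimate comes out to $36,000. The value lies less in the number than in the transparent assumptions: a reader can swap in their own conversion rate and see the result change.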

If a model has to choose between citing yet another generic post or your post that includes real data, a custom framework, or a unique analysis, it’s more likely to choose you.

Note that this strategy doesn’t mean publishing massive studies or analyses. Success can be as simple as:

Sharing behind-the-scenes metrics or experiments.

Running a quick reader poll.

Synthesizing insights into a new format or visual.

Naming a repeatable process or mental model.

Content that’s original, concrete, and verifiable helps LLMs trust you and cite you.

Keep Putting Human Readers First

Even as I adapt for LLMs, I haven’t stopped writing for humans. In fact, the two strategies aren’t in conflict; if anything, they reinforce each other.

LLMs are trained on content that real people find useful, link to, and share. So if your post resonates with readers, there’s a good chance it’ll signal value to the models as well.

That’s why I still focus on:

Strong headlines that make people want to click or share.

Clean, readable formatting that’s easy to skim.

Clear takeaways that people can apply immediately.

Voice and POV, especially in sections where nuance or lived experience matters.

I’ll also write content that isn’t necessarily optimized for search, but still helps grow my brand, reach a new audience, and solve real problems for my readers. Those posts might not show up in a Google AI Overview, but they deepen the relationship with actual readers, and that still matters.

For example, it’s unlikely anyone is searching for a story about how I vibe-coded the latest version of my personal website, but writing about it gave me a chance to show my readers a real-world example of vibe coding while sharing more of my personal brand.

At the end of the day, LLMs don’t buy your product, subscribe to your newsletter, or tell their friends about your work. People do.

Small Changes That Matter

Most of the shifts I’ve made to my content strategy for GEO are subtle. I’m not reinventing everything; I’m simply tweaking how I choose topics, structure posts, and signal value to both machines and human readers.

But in a landscape where 20% of Google searches now trigger AI Overviews, and LLM-driven discovery is growing faster than anyone expected, these small changes can make a big difference.

You don’t need to abandon traditional SEO (seriously, please don’t!). But you do need to start thinking about how your content shows up when a user asks a model a question. That’s what GEO is really about.

I’ll be sharing more in the coming weeks on everything from how to build authority to tools for measuring LLM visibility. If you want to stay ahead of this shift, subscribe to get access to these resources. Premium subscribers can also receive one-on-one support from me to adapt their content strategy through Substack chats and office hours.

To endless possibilities,

Casandra
